Fairer AI for long-term equity

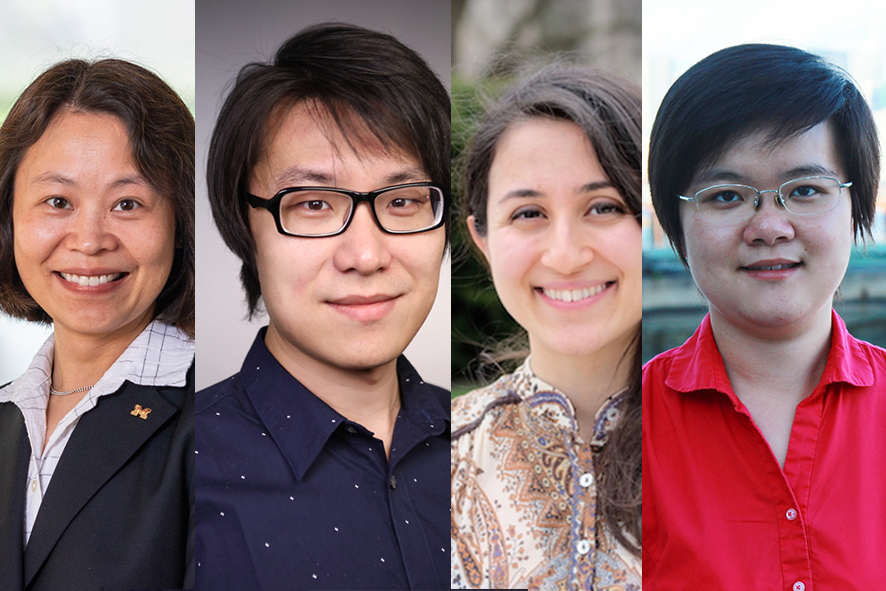

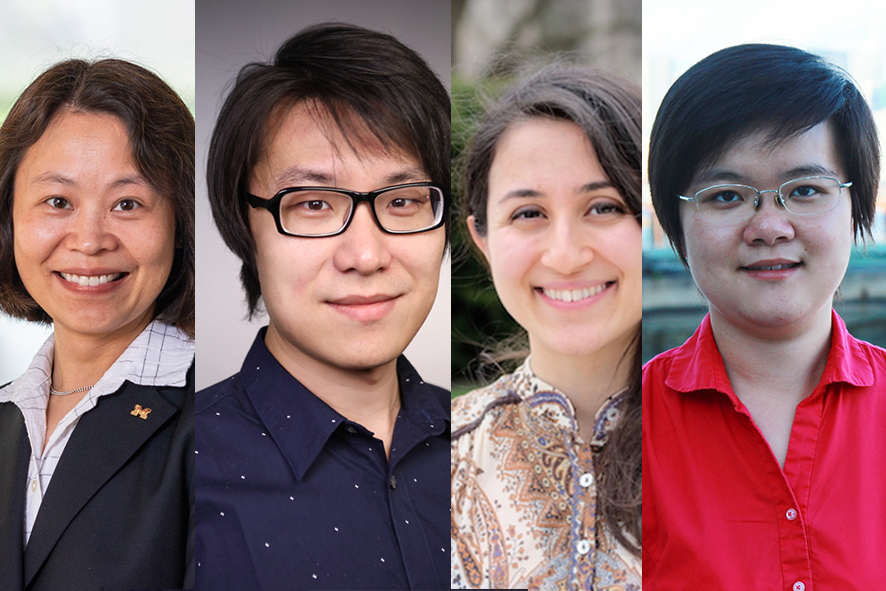

Prof. Mingyan Liu is a key member of a project to mitigate bias in Artificial Intelligence and Machine Learning systems for long-term equitable outcomes.

“There is an increasing awareness in the AI research community of the issue of bias,” says Mingyan Liu, the Peter and Evelyn Fuss Chair of ECE and one of the principal investigators on a new Fairness in AI grant, funded by NSF in partnership with Amazon. “There’s a lot we need to learn if we want solutions that achieve equity in the long term.”

The project, based at UC Santa Cruz, aims to identify and mitigate bias in AI and Machine Learning systems to achieve long-lasting equitable outcomes. It focuses specifically on automated or algorithmic decision-making that still involves some level of human participation. This includes learning algorithms that are trained using data collected from humans, or algorithms that are used to make decisions that impact humans. Such algorithms make predictions based on observable features, which can then be used to evaluate college applications or recommend sentences in a court of law, for example.

However, because these algorithms are trained on data collected from human populations, there are many examples of them inheriting pre-existing biases from those datasets.

“We often have a lot of data for certain groups and less data for other groups, which is one way bias is created,” Liu says. “This bias means the product won’t work as well for the underrepresented groups, and this can be very dangerous.”

For example, facial recognition software is better at recognizing white faces than Black faces, which puts Black people more at risk for wrongful arrest. Job search platforms have been shown to rank less qualified male applicants higher than more qualified female applicants. Auto-captioning systems, which are important for accessibility, vary widely in accuracy depending on the accent of the speaker. Without mitigation, such bias in AI and ML systems can perpetuate or even worsen societal inequalities.

“Technical solutions have been proposed to guarantee equalized measures between protected groups when training a statistical model, but an often overlooked question is ‘what after?’” says project leader Yang Liu (PhD 2015), professor of computer science and engineering at UC Santa Cruz. “Once a model is deployed, it will start to impact a later decision and the society for a long period of time. Our team’s proposal aims to provide a systematic understanding and study of the dynamic interaction between machine learning and people so we can better understand the consequences to our society of the deployment of a machine learning algorithm.”

In addition to UC Santa Cruz and U-M, the project is a collaboration with Ohio State University and Purdue University. Professor Parinaz Naghizadeh (MS PhD EE 2013, 2016; MS Math 2014) is the OSU PI, and Professor Ming Yin is the Purdue PI. Both Y. Liu and Naghizadeh were advised by M. Liu at U-M.
