Real-time monitor tracks the growing use of network filters for censorship

The team says their framework can scalably and semi-automatically monitor the use of filtering technologies for censorship at global scale.

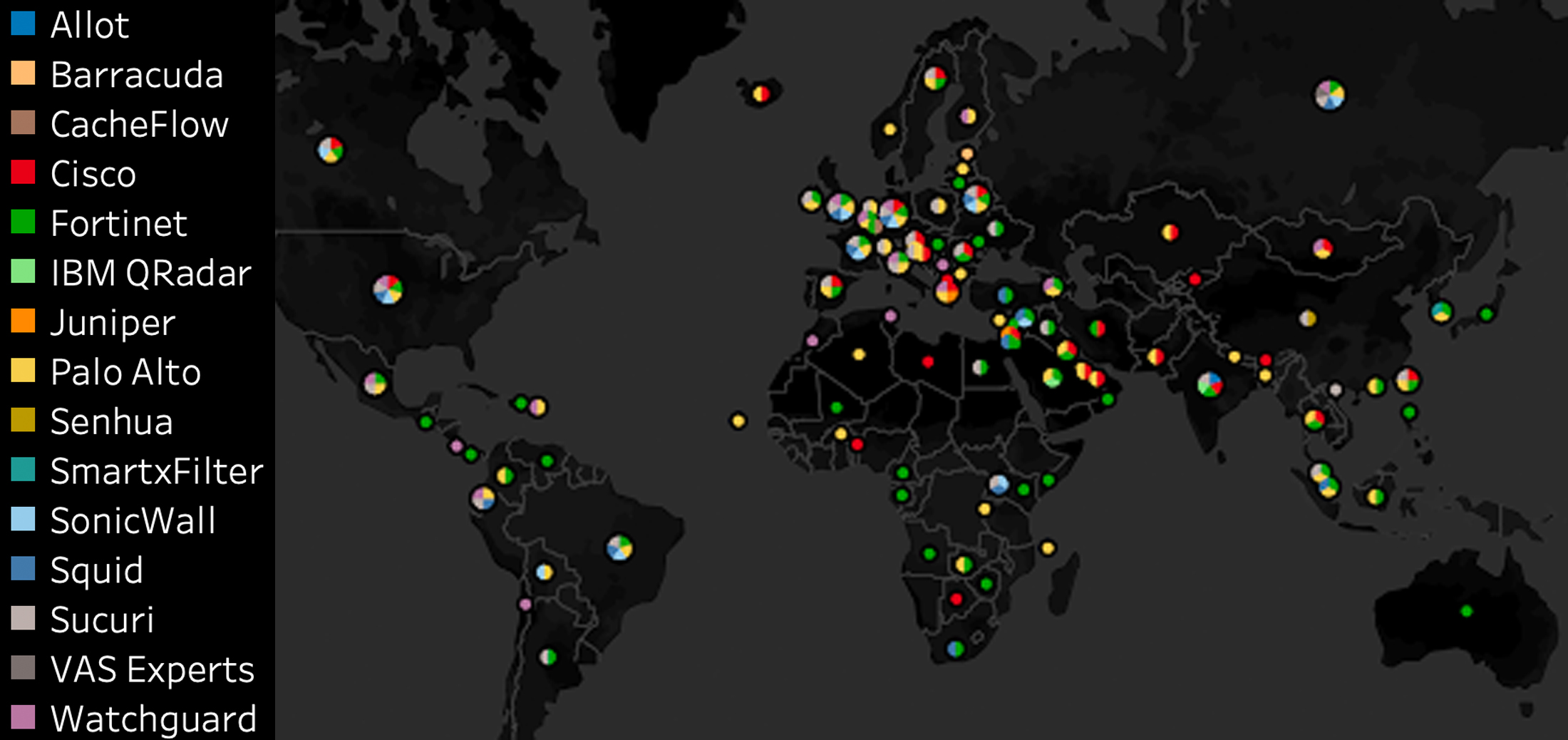

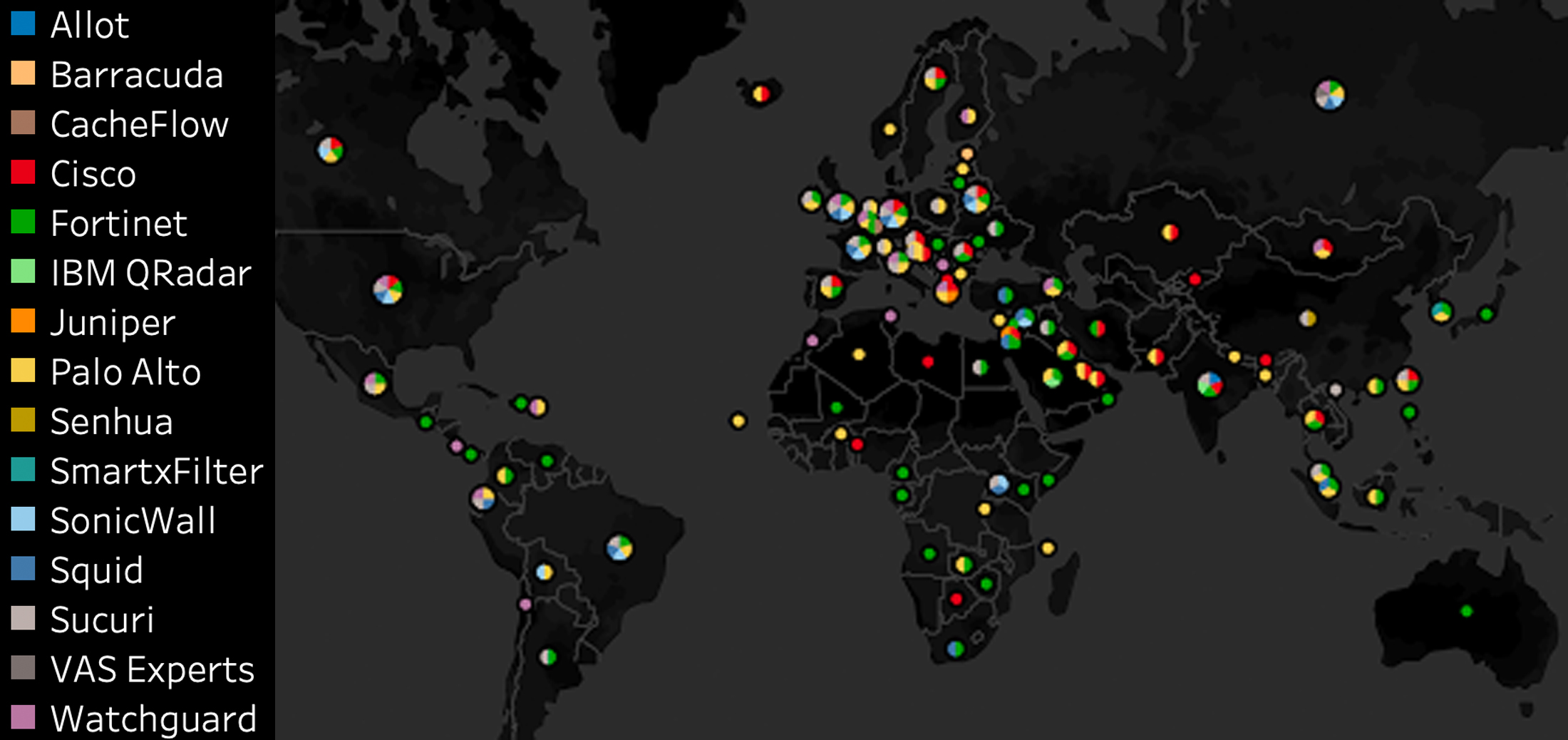

Researchers at the University of Michigan have found that popular commercial network filtering technologies are being used for censorship in as many as 103 countries around the world. With a framework they call FilterMap, the researchers drew on data from several tools designed to detect censorship in real time, with the goal of calling attention to how widely this technology is being repurposed in ways its makers did not intend.

Led by Prof. Roya Ensafi, the team identified the use of 90 such filters and can now monitor their use continuously. The study found this type of censorship in 36 of the 48 countries that the Freedom House “Freedom on the Net” report rates as “Not Free” or “Partly Free.”

Network filters of this kind, including products from well-known commercial manufacturers such as Palo Alto Networks and Cisco, are typically used by workplace or academic networks to restrict access to content deemed unsafe or inappropriate. Their use for general internet censorship makes them a form of “dual-use technology.”

“Filters are increasingly being used by network operators to control their users’ communication,” Ram Sundara Raman, a CSE PhD student on the project, writes in a blog post, “most notably for censorship and surveillance.”

It was this behavior that the team set out to identify and track. According to Sundara Raman, the work was motivated by earlier cases in which censorship monitoring prompted companies selling filter products to change their behavior. He cites the University of Toronto’s Citizen Lab, whose investigation led Canadian vendor Netsweeper to change its filtering practices.

Now, Sundara Raman and his colleagues hope to drive similar results by making the identification of filter use both easier and more automated. Until now, he writes, measuring the deployment of filters has required manually identifying a unique signature for a particular filter product, which in turn requires both physical access to a sample product and the help of on-the-ground collaborators in the relevant countries. Researchers would then have to run network scans for that signature, and continued monitoring would require a sustainable system for repeating those scans.

“The censorship measurement community has only identified a handful of filters over the years.”

With FilterMap, however, the team has already identified the use of 90 filters. Of these, 70 were detected in a large-scale measurement in October 2018, and the remaining 20 were added over three months of longitudinal measurements. Those later detections indicate that the system can monitor filter-based censorship continuously.

The system uses data from Censored Planet, the group’s previously released global censorship monitor, and from the Open Observatory of Network Interference (OONI). From these datasets, the researchers grouped blockpages into clusters that shared certain unique identifiers. These identifiers, or signatures, linked blocking activity to the use of a particular network filter.
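To make the idea of “clusters of blockpages that share a unique identifier” more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not FilterMap’s actual method, which the article does not describe in detail. It assumes hypothetical measurement records of the form `(ip, country, html)` rather than the real Censored Planet or OONI schemas, and it uses the normalized HTML `<title>` as a stand-in identifier. The functions `extract_identifier`, `cluster_blockpages`, and `match_signature` and the `min_ips` threshold are hypothetical names chosen for this example.

```python
import re
from collections import defaultdict

# Assumption: the page <title> serves as the "unique identifier". Real systems
# may rely on other distinctive features of a blockpage.
TITLE_RE = re.compile(r"<title>(.*?)</title>", re.IGNORECASE | re.DOTALL)


def extract_identifier(html):
    """Return the whitespace-normalized, lowercased <title> text, or None."""
    match = TITLE_RE.search(html)
    if match is None:
        return None
    return " ".join(match.group(1).split()).lower()


def cluster_blockpages(records, min_ips=2):
    """Group (ip, country, html) records that share an identifier.

    Only clusters observed from at least `min_ips` distinct IPs are kept,
    so each surviving identifier acts as a candidate filter signature.
    """
    clusters = defaultdict(set)
    for ip, country, html in records:
        ident = extract_identifier(html)
        if ident:
            clusters[ident].add((ip, country))
    return {
        ident: members
        for ident, members in clusters.items()
        if len({ip for ip, _ in members}) >= min_ips
    }


def match_signature(html, signatures):
    """Return the signature a new response matches, or None if it matches none."""
    ident = extract_identifier(html)
    return ident if ident in signatures else None


if __name__ == "__main__":
    # Hypothetical records using documentation IP addresses and placeholder country codes.
    records = [
        ("192.0.2.1", "XA", "<html><title>Access Denied - ExampleFilter</title></html>"),
        ("192.0.2.2", "XB", "<html><title>Access  Denied - ExampleFilter</title></html>"),
        ("192.0.2.3", "XA", "<html><title>Welcome</title></html>"),
    ]
    signatures = cluster_blockpages(records)
    print(sorted(signatures))  # ['access denied - examplefilter']
```

Once a signature exists, later measurements can be checked against it with `match_signature`. That matching step is what makes continuous monitoring cheap compared with deriving a signature by hand from a physical product.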

Of the 90 signatures identified by the researchers, 15 were tied to commercial vendors, mostly headquartered in the United States. The remaining 75 were products of specific actors, such as governments, ISPs, or other organizations.

“These filters are located in many locations in 103 countries,” Sundara Raman writes, “revealing the diverse and widespread use of content filtering technologies. We observed blockpages in 14 languages.”

The blockpages typically cited a legal concern, and pornography and gambling websites were the most commonly blocked by these filters. Other blocked categories included websites featuring provocative attire and anonymization tools.

In light of these discoveries, the team hopes filter developers will take steps to address these secondary uses of their products.

“The inability to monitor the proliferation of filters more broadly withholds researchers, regulators, and citizens from efficiently discovering and responding to the misuse of these ‘dual-use technologies,’” Sundara Raman writes. “Our continued publication of FilterMap data will help the international community track the scope, scale and evolution of content-based censorship.”

The researchers present their findings in the paper “Measuring the Deployment of Network Censorship Filters at Global Scale” at the 2020 Network and Distributed System Security Symposium (NDSS).
