Thomas Chen earns NSF Graduate Research Fellowship for research in artificial neural networks for computer vision

Thomas and his group are working to improve upon artificial neural network design through a process called sparse coding.

Thomas Chen has been awarded an NSF Fellowship as part of the Graduate Research Fellowship Program to pursue his research in the design of efficient artificial neural networks for computer vision.

Computer vision is used in a wide range of applications, including context-aware image recognition on mobile devices and sensor nodes, security cameras, and micro unmanned aerial vehicles (UAVs) for wide-area surveillance. A better understanding of human visual processing and of neural networks has led to advances in the algorithms used in these applications.

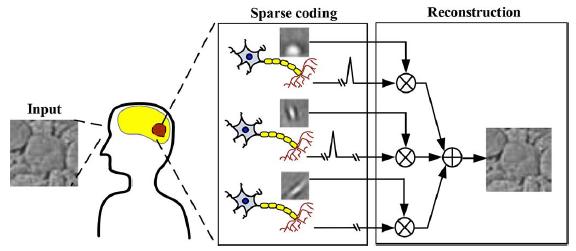

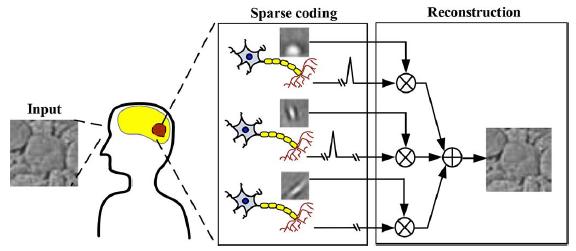

One important difference between biological neural networks and conventional computers is the way they process information: neural networks operate in parallel to solve problems, whereas computers execute a sequence of instructions one at a time.

Implementing artificial neural networks on conventional computers for practical problems has had limited success because of interconnect complexity and the need for extremely high memory bandwidth.

Thomas and his group are working to improve artificial neural network design through a process called sparse coding. If the activity of the artificial neural network is kept very sparse, so that it sends only the most important information, then less information has to be processed at any one time, which improves efficiency and saves power.
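
To make the efficiency argument concrete, here is a minimal Python (NumPy) sketch, assuming a layer whose output is a weighted sum of neuron activities stored in a weight matrix. The function names, layer sizes, and the 10-out-of-512 activity level are hypothetical illustrations, not details of the group's design. When only the nonzero activities are processed, the number of multiply-accumulate (MAC) operations scales with the number of active neurons rather than with the total number of neurons, while the result stays the same.

```python
import numpy as np

def dense_update(weights: np.ndarray, activity: np.ndarray) -> tuple[np.ndarray, int]:
    """Multiply every weight by every activity value, whether active or not."""
    macs = weights.shape[0] * weights.shape[1]
    return weights @ activity, macs

def sparse_update(weights: np.ndarray, activity: np.ndarray) -> tuple[np.ndarray, int]:
    """Process only neurons whose activity is nonzero (event-driven style)."""
    active = np.flatnonzero(activity)
    # Each active neuron contributes exactly one column of the weight matrix.
    out = weights[:, active] @ activity[active]
    macs = weights.shape[0] * active.size
    return out, macs

rng = np.random.default_rng(0)
n_out, n_in = 64, 512
weights = rng.standard_normal((n_out, n_in))

# Hypothetical sparse activity: only 10 of the 512 inputs are active.
activity = np.zeros(n_in)
activity[rng.choice(n_in, size=10, replace=False)] = 1.0

y_dense, macs_dense = dense_update(weights, activity)
y_sparse, macs_sparse = sparse_update(weights, activity)

assert np.allclose(y_dense, y_sparse)  # identical output either way
print(macs_dense, macs_sparse)  # prints "32768 640"
```

In this toy case the sparse path does about 2% of the arithmetic of the dense path; in hardware, fewer operations also mean fewer memory accesses, which is where much of the power goes.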

Thomas and his group were able to design custom hardware architectures for efficient and high-performance implementations of a sparse coding algorithm called the sparse and independent local network (SAILnet).
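
For readers curious about what a SAILnet-style network computes, the sketch below loosely follows the algorithm as it is commonly described: leaky integrate-and-fire neurons driven by feedforward weights `Q`, inhibited by lateral weights `W`, and gated by adaptive thresholds `theta`, with local learning rules that push each neuron toward a target firing rate `p`. It is an illustration in Python with NumPy only; the function names, step count, leak rate `eta`, target rate, and learning rates are assumptions chosen for readability, and this is not the group's hardware architecture.

```python
import numpy as np

def sailnet_infer(x, Q, W, theta, n_steps=50, eta=0.1):
    """SAILnet-style inference sketch: returns spike counts per neuron.

    x: input patch (n_inputs,); Q: feedforward weights (n_neurons, n_inputs);
    W: lateral inhibitory weights (n_neurons, n_neurons); theta: thresholds.
    n_steps and eta are hypothetical values.
    """
    n = Q.shape[0]
    u = np.zeros(n)        # membrane potentials
    y = np.zeros(n)        # spikes emitted on the previous step
    counts = np.zeros(n)   # total spikes per neuron
    drive = Q @ x          # feedforward excitation is fixed for one input patch
    for _ in range(n_steps):
        # Leaky integration: excitation minus inhibition from neurons that just fired.
        u = u + eta * (drive - W @ y - u)
        y = (u > theta).astype(float)  # spike wherever the threshold is crossed
        u[y > 0] = 0.0                 # reset neurons that spiked
        counts += y
    return counts

def sailnet_learn(x, counts, Q, W, theta, p=0.05, alpha=0.01, beta=0.001, gamma=0.01):
    """One learning step for a single input; all rates are hypothetical."""
    # Inhibition grows between neurons that fire together more often than p**2.
    W = W + alpha * (np.outer(counts, counts) - p ** 2)
    np.fill_diagonal(W, 0.0)  # no self-inhibition
    W = np.maximum(W, 0.0)    # inhibitory weights stay non-negative
    # Oja-like update: weights move toward inputs that made the neuron fire.
    Q = Q + beta * counts[:, None] * (x[None, :] - counts[:, None] * Q)
    # Thresholds rise for neurons firing above the target rate, fall below it.
    theta = theta + gamma * (counts - p)
    return Q, W, theta

# Usage on random inputs (a stand-in for whitened image patches).
rng = np.random.default_rng(1)
n_inputs, n_neurons = 16, 8
Q = 0.1 * rng.standard_normal((n_neurons, n_inputs))
W = np.zeros((n_neurons, n_neurons))
theta = np.full(n_neurons, 0.5)
for _ in range(100):
    x = rng.standard_normal(n_inputs)
    counts = sailnet_infer(x, Q, W, theta)
    Q, W, theta = sailnet_learn(x, counts, Q, W, theta)
```

Every update uses only quantities available at a neuron or a synapse (its own spike count, its input, and its neighbor's spike count), which is the kind of locality that makes an algorithm like this attractive for parallel custom hardware.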

This research was published in the paper "Efficient Hardware Architecture for Sparse Coding" by Jung Kuk Kim, Phil Knag, Thomas Chen, and Zhengya Zhang, IEEE Transactions on Signal Processing, vol. 62, no. 16, August 15, 2014.

Building on this work, Thomas and the group developed a 65 nm CMOS ASIC that implements feature extraction using a bus-ring architecture. Feature extraction, a key problem in image processing, involves identifying features such as edges and regions in an image. The final chip occupied an area of 3.06 mm² and achieved an inference throughput of 1.24 Gpixel/s at 1.0 V and 310 MHz.
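
The reported figures also allow a quick back-of-the-envelope check. The short Python calculation below derives two quantities the article does not state directly: the average number of pixels processed per clock cycle, assuming throughput scales directly with the clock frequency, and the throughput per unit of chip area. Both are simple ratios of the published numbers, not measured results.

```python
# Figures reported for the 65 nm sparse coding ASIC.
throughput_pixels_per_s = 1.24e9  # 1.24 Gpixel/s
clock_hz = 310e6                  # 310 MHz
area_mm2 = 3.06                   # 3.06 mm^2

# Derived ratios (assumption: throughput is proportional to the clock rate).
pixels_per_cycle = throughput_pixels_per_s / clock_hz
area_efficiency = throughput_pixels_per_s / 1e9 / area_mm2  # Gpixel/s per mm^2

print(f"{pixels_per_cycle:.2f} pixels per clock cycle")  # 4.00
print(f"{area_efficiency:.3f} Gpixel/s per mm^2")        # 0.405
```

In other words, at 310 MHz the chip handles about four pixels per clock cycle on average.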

The group presented their results at the 2014 IEEE Symposium on VLSI Circuits and subsequently published the paper "A Sparse Coding Neural Network ASIC With On-Chip Learning for Feature Extraction and Encoding" by Phil Knag, Jung Kuk Kim, Thomas Chen, and Zhengya Zhang, IEEE Journal of Solid-State Circuits, vol. 50, no. 4, April 2015.

Thomas is a second-year electrical engineering Ph.D. student working under the direction of Prof. Zhengya Zhang. He received his bachelor's degree from the University of Michigan in 2013, specializing in VLSI design.

His research interests are in high-speed, low-power VLSI circuits and systems. He enjoys the way his current research touches on neuroscience, digital signal processing, and VLSI all at the same time. He also enjoys the mix of hands-on work and programming that his research involves.
